BotTalk has just released a new version, code-named SU-19. Here are the top features of this release.

DashBot Integration

DashBot is the leading provider of "Actionable Bot Analytics". If you are a number cruncher like we are, those guys have everything you could dream of, including live transcripts of your Alexa Skill interactions!

We are very proud to announce that, as of today, BotTalk supports DashBot out of the box. All you need to do is add the DashBot API key to your scenario settings:

    scenario:
      dashbot:
        alexa: 'DASHBOT-API-KEY-GOES-HERE'
      invocation: 'Test Crypto Demo'
      name: 'Test Crypto Demo'
      locale: en-US
      category: EDUCATION_AND_REFERENCE
      examplePhrases:
        - 'Alexa, open Test Crypto Demo'

A couple of lines and you're in. And it works amazingly well.

set

Our DSL is evolving. We carefully study the feedback of the BotTalk developers and try to make the language more concise.

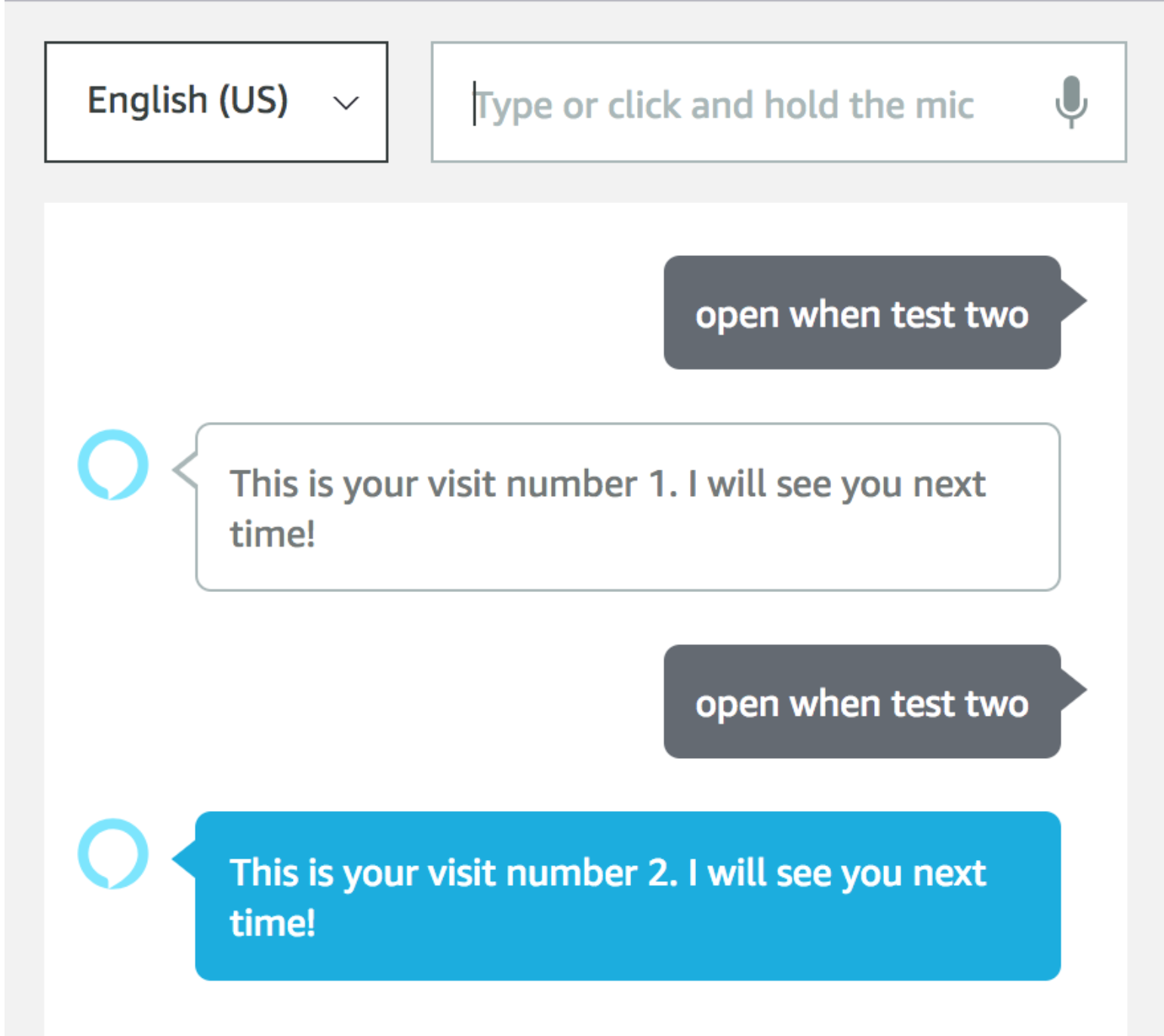

This release introduces the set action, which replaces the old setContext action.

While other platforms either don't have the concept of stored context variables or rely on third-party database solutions, in BotTalk you can store your variables with a one-liner:

    - name: Initial Step
      actions:
        - set: 'visit_number = visit_number + 1'
      next: Second Step

    - name: Second Step
      actions:
        - sendText: 'This is your visit number {{ visit_number }}. '
      next: Exit

In this scenario we use the context variable visit_number to count how many times the user has visited our skill / action. BotTalk stores context variables between sessions, so visit_number is available every time the skill / action is invoked.
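To make the idea of a variable that survives between sessions more concrete, here is a minimal Python sketch of a per-user visit counter. It is not BotTalk's implementation: the `ContextStore` class, the JSON file backing it, and the choice to treat a missing visit_number as 0 on the first visit are all assumptions made for illustration.

```python
import json
import os
import tempfile


class ContextStore:
    """Hypothetical file-backed store standing in for a platform's persistence layer."""

    def __init__(self, path):
        self.path = path

    def _read_all(self):
        # Return every user's saved context, or an empty dict if nothing is stored yet.
        if not os.path.exists(self.path):
            return {}
        with open(self.path) as f:
            return json.load(f)

    def load(self, user_id):
        return self._read_all().get(user_id, {})

    def save(self, user_id, context):
        data = self._read_all()
        data[user_id] = context
        with open(self.path, "w") as f:
            json.dump(data, f)


def run_session(store, user_id):
    """Simulate one invocation: load context, increment the counter, persist, reply."""
    context = store.load(user_id)
    # Assumption: an unset variable is treated as 0 on the very first visit.
    context["visit_number"] = context.get("visit_number", 0) + 1
    store.save(user_id, context)
    return f"This is your visit number {context['visit_number']}. "


if __name__ == "__main__":
    demo_path = os.path.join(tempfile.mkdtemp(), "context.json")
    demo_store = ContextStore(demo_path)
    print(run_session(demo_store, "demo-user"))
    print(run_session(demo_store, "demo-user"))
```

Each call to `run_session` plays the role of one skill invocation; because the context is written back after every call, the second invocation for the same user sees the incremented value.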

reprompt

Sometimes your user may be too slow to answer the assistant. It's good practice to reprompt the user with a kind reminder: "Hey, here's what I need to know in order to continue".

For such cases we created the reprompt action.

The text inside the reprompt action will be played to the user if either of the following conditions is met:

- the assistant did not hear the user

- the user's response did not match the intent we're expecting in the next section

Consider the following example:

    - name: Start of the Scenario Logic
      actions:
        - sendText: >
            Let us start with the scenario logic right here!
            Do you want to continue?
        - reprompt: >
            I don't think I got it quite right.
            Do you want to continue with the game?
        - getInput:
      next:
        yes_next: Start of the Scenario Logic
        no_thanks: Exit

We start the step by asking the user whether she would like to continue the game. Then we add a reprompt action, a kind reminder of what we actually want to hear, in case the user either takes a long time to answer or answers our question with something other than 'Yes' or 'No'.
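The two reprompt conditions can be sketched as a small decision function. This is only an illustration in Python, not how BotTalk evaluates a step internally: the `handle_turn` function, the `NEXT_STEPS` mapping, and the convention that `None` means "the assistant heard nothing" are assumptions. The intent names and step names are taken from the scenario above.

```python
from typing import Optional, Tuple

# Reprompt text from the example step, joined into one line.
REPROMPT = "I don't think I got it quite right. Do you want to continue with the game?"

# Intents expected in the step's "next" section, mapped to their target steps.
NEXT_STEPS = {
    "yes_next": "Start of the Scenario Logic",
    "no_thanks": "Exit",
}


def handle_turn(intent: Optional[str]) -> Tuple[str, str]:
    """Decide what happens after getInput.

    Returns ("goto", step_name) when the intent is expected,
    or ("reprompt", text) when the user should be asked again.
    """
    if intent is None:
        # Condition 1: the assistant did not hear the user.
        return ("reprompt", REPROMPT)
    if intent not in NEXT_STEPS:
        # Condition 2: the response did not match any expected intent.
        return ("reprompt", REPROMPT)
    return ("goto", NEXT_STEPS[intent])


if __name__ == "__main__":
    print(handle_turn(None))
    print(handle_turn("yes_next"))
```

Silence and an unexpected answer are handled by separate branches here only to mirror the two bullet points; both lead to the same reprompt text.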

Your Feedback

You can study the details of the new features in our Documentation.

This release would not be possible without the insightful and detailed feedback from our users Sree and Alexey.

Do you want to request a feature or leave your feedback?

Thanks

Our biggest thanks go to our team of programmers, who made it happen!

We 💛 you all =)