Table of Contents

1. “Hello, world!” - it doesn’t take a lot to make an Assistant talk - sendText

2. Assistant asks its first Question - getInput

3. Understanding different ways Humans can say something - intents and utterances

4. What happens next - deciding what to do with the Answer

5. Write - Test - Repeat

6. Dealing with unexpected answers - when stupid Humans don’t do what they’re asked - *

7. Greet everyone! Introducing built-in slots

8. Greetings from space. Using custom slots

9. Break out of scripted flow! entrypoint: true

10. Curing Robot’s amnesia - remembering things with set

11. Contacting the outside world - making API requests with getUrl

12. A picture is worth 1000 words. Enhancing speech with visuals using sendCard

13. Trust Robots with making decisions - using compareContext and when

14. Deploying to Amazon and Google! Let’s go live!

“Hello, world!” - it doesn’t take a lot to make an Assistant talk - sendText

Almost every book on any programming language starts with this one. Let’s just put “Hello, World!” on the screen. But here is the funny part: we don’t want it to be on the screen. Instead, we want our Assistant to say it.

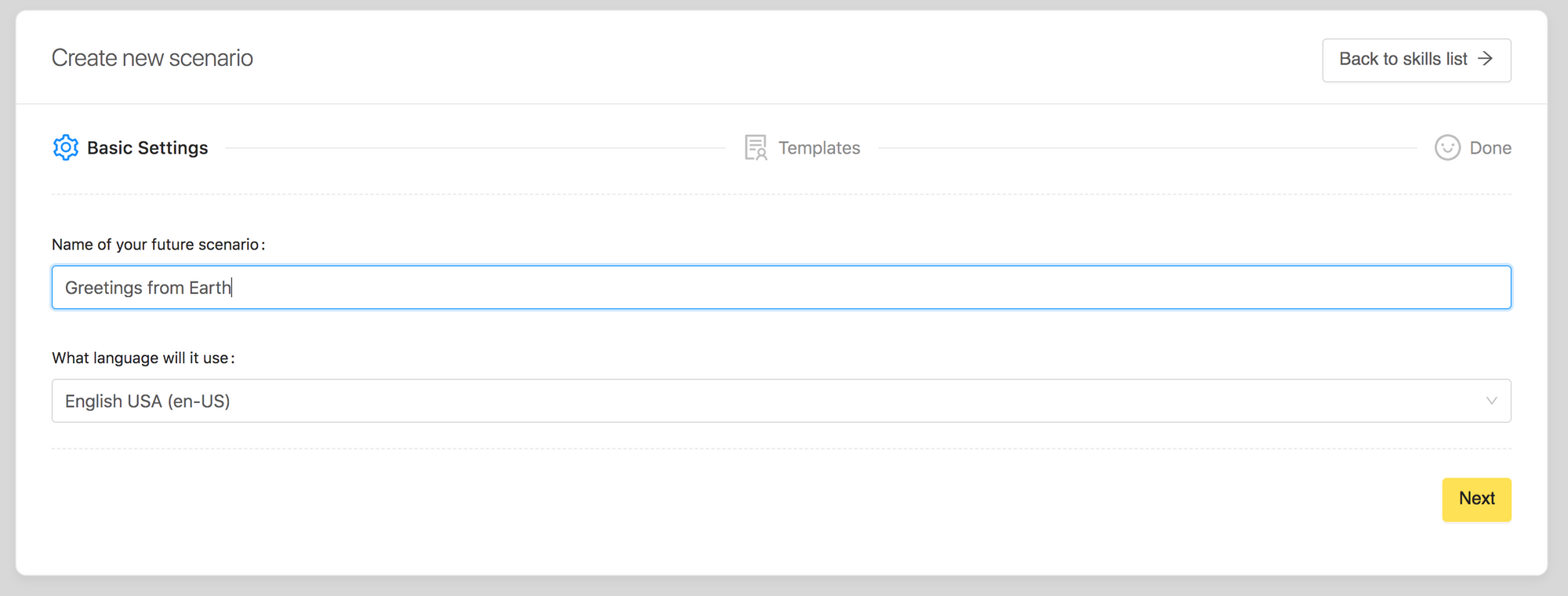

Head over to https://bottalk.de, log in and hit the + Create a new scenario button. Give your scenario a name (we’ll go with Greetings from Earth) and choose the language, then hit the Next button:

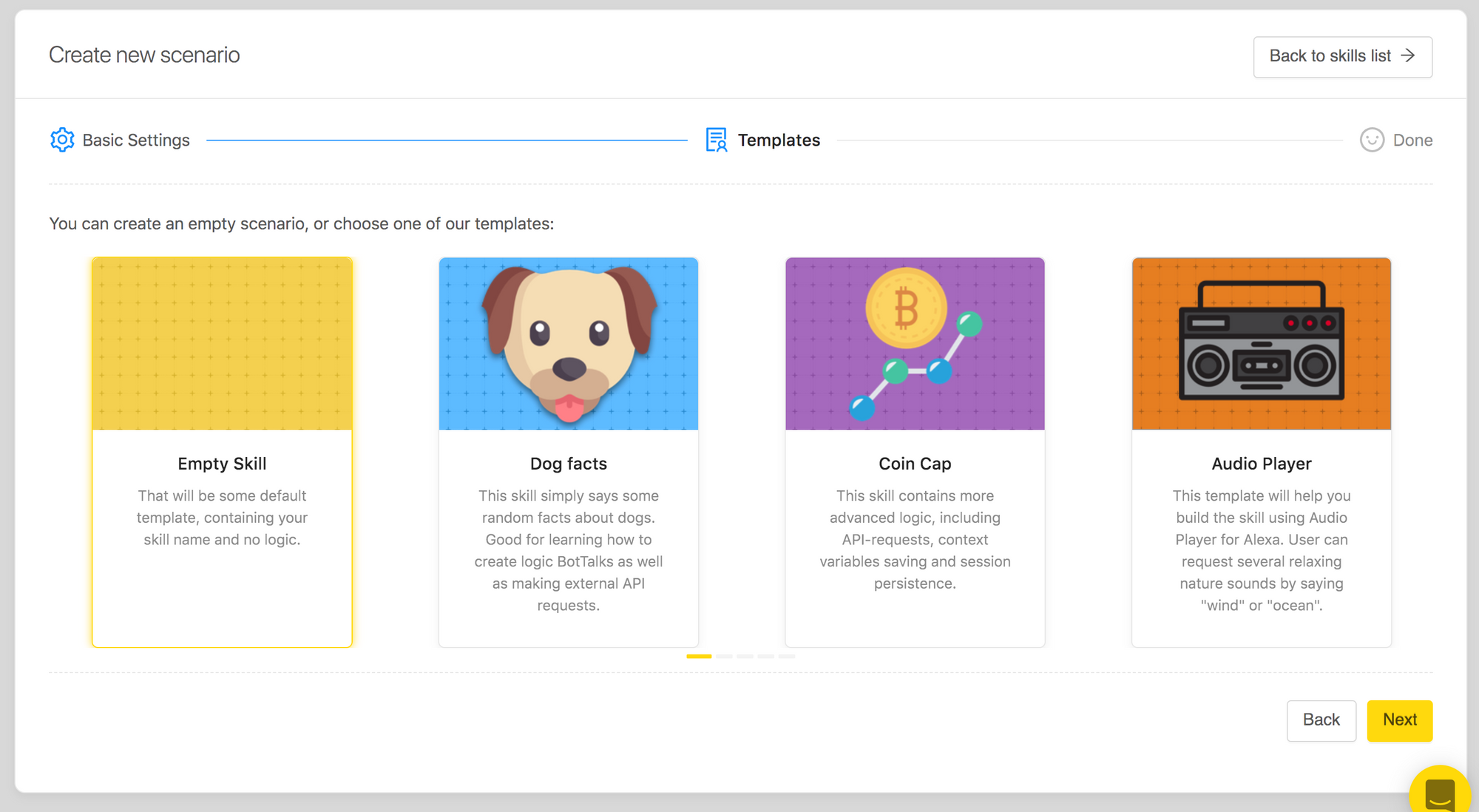

Choose Empty Scenario from the Templates section and hit Next:

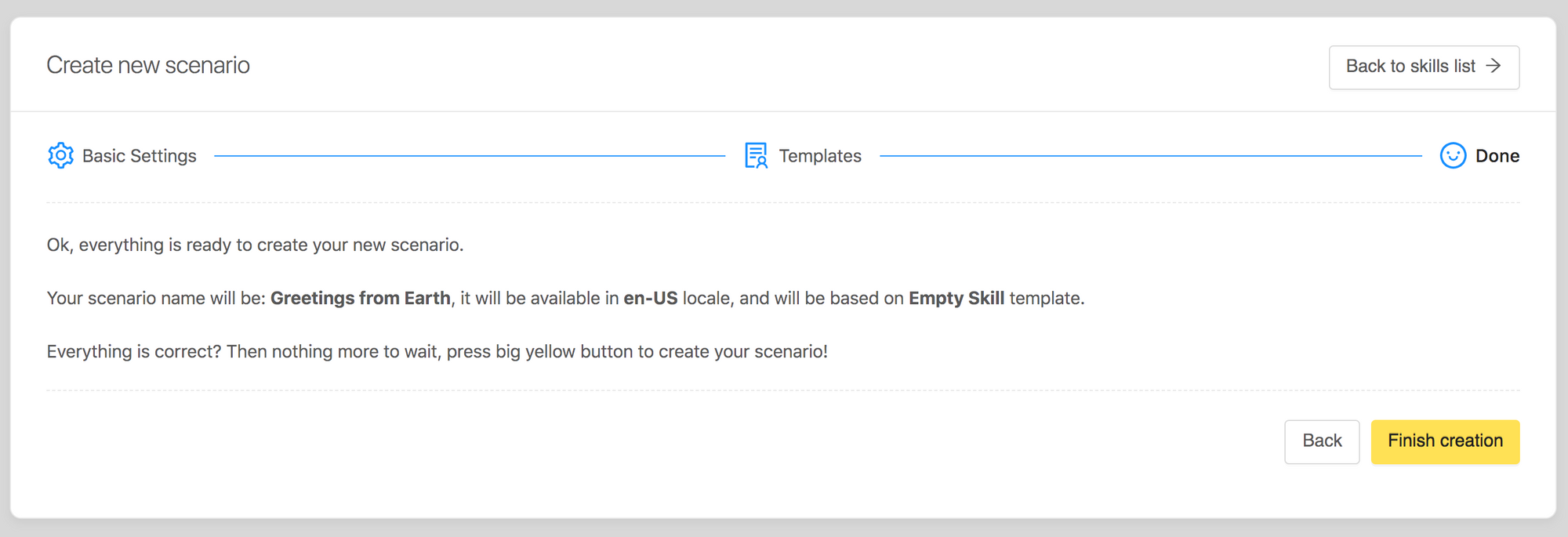

BotTalk will give you an overview of the scenario you are about to create. Confirm by hitting the Finish button:

When you launch your scenario in the editor, there will be a lot of code that was pregenerated for you by BotTalk. Don’t be afraid. We’ll get to the nitty-gritty details of everything later. For now, you want your Assistant to say “Hello, world!”, remember?

To do that, find the line with the text steps: on it and hit Enter at the end of this line. Then, starting from the next line, write (or copy and paste) the following:

    - name: My first step
      actions:
        - sendText: Hello, World!

What you did here is create your first step. The step that follows the steps: line will be executed first. You gave your step the name “My first step”, and you’re commanding your Assistant to take some actions. In your case: to send a piece of text using the sendText action.

Now hit the Save button and head over to the Test section. Make sure your volume is turned on (and your headphones are on, if you’re reading this at the office), because you’re about to experience something special: your Assistant talking.

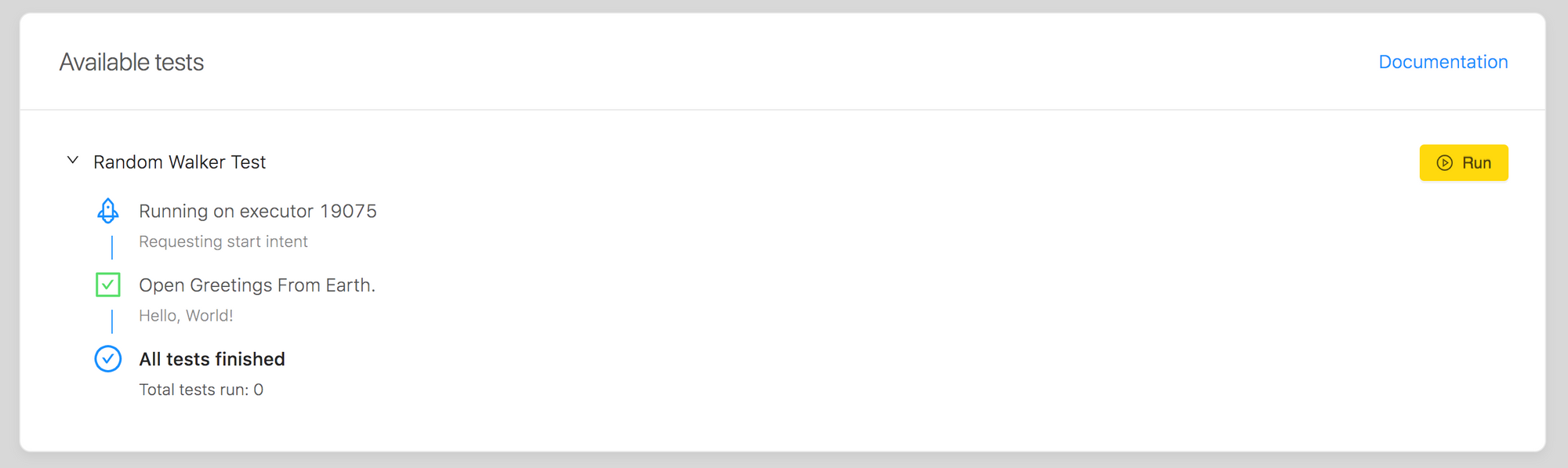

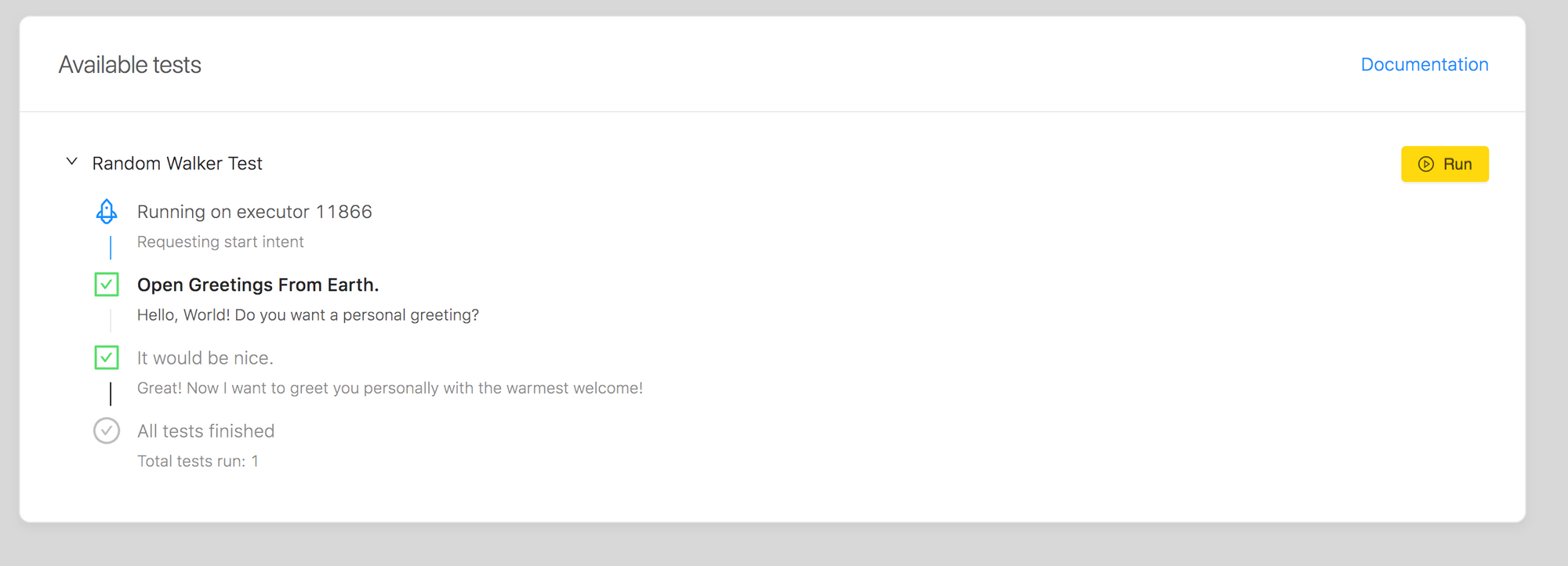

Hit the Run button to start the Random Walker Test and enjoy!

Congratulations! You’ve just created your first Alexa Skill and Google Action!

Source code for this chapter: https://github.com/andremaha/bottalk-course/tree/master/chapter-1

Assistant asks its first Question - getInput

Great, now that you have the Assistant talking, the next step is to ask the user a question. You do that with the getInput action.

Let’s make some changes to our step to include the getInput action.

    - name: My first step
      actions:
        - sendText: >
            Hello, World!
            Do you want a personal greeting?
        - getInput:

The first thing you might have noticed: we put the greater-than sign > after the sendText action. Normally, your script would break if you put line breaks into the text of the sendText action. For example, if you do this in your script

    - name: My first step
      actions:
        # WARNING: Following line will break your scenario!
        - sendText: "Hello, World!
        Do you want a personal greeting?"
        - getInput:

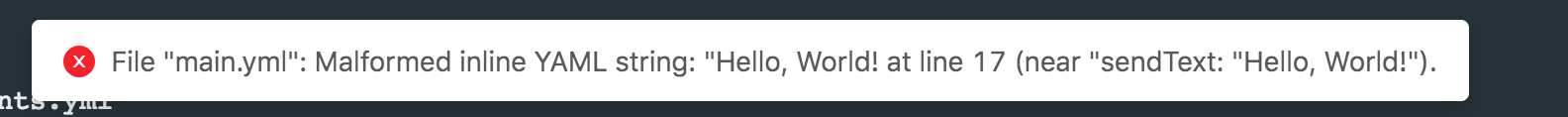

and try to save the scenario you will get the following error:

The > sign helps you out when you want to write longer texts and still keep your script readable.
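To make the effect of > concrete, here is a small sketch. It relies on standard YAML behavior (the folded style joins the lines of the block with spaces) and assumes that BotTalk reads scenarios with a regular YAML parser, which the course does not state explicitly. The two actions below should therefore produce roughly the same spoken text, apart from a trailing line break that the folded form keeps:

```yaml
# Folded style: the two lines are joined with a single space
- sendText: >
    Hello, World!
    Do you want a personal greeting?

# Assumed equivalent on a single line (shown only for comparison)
- sendText: Hello, World! Do you want a personal greeting?
```

The folded form is mostly a convenience for you as the author: the listener hears one sentence after the other, while you get short, readable lines in the editor.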

Now, after we’ve sent the text to the User, we actually want to make our Assistant listen. That’s what getInput does. On your devices you will usually see some visual cue: on Alexa, a blue ring or line; on Google Home, blinking white dots.

That’s how the Human knows - ok, here is where I come in - it’s time for me to say something.

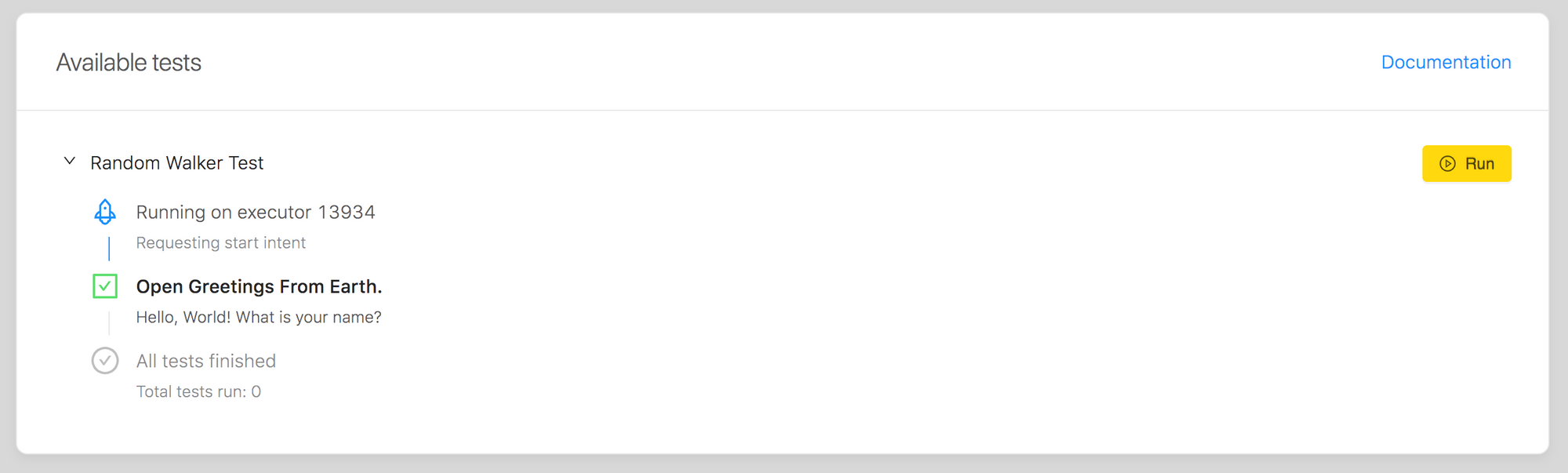

If you launch the Tester now, nothing will change:

Why? Well, although you asked the User a question and even put the Assistant in the “listening” mode with getInput, you still haven’t defined two important things.

- What are you expecting your user to say?

- What would happen next - after she said it?

The answers to those questions are covered in the next chapter…

Source code for this chapter: https://github.com/andremaha/bottalk-course/tree/master/chapter-2

Understanding different ways Humans can say something - intents and utterances

Now you’ve taught your Assistant how to talk and how to listen. Perfect! This part is even more exciting. In this chapter you’ll learn how to make your Assistant understand.

Let’s take a quick look at the code you’ve written so far:

    - name: My first step
      actions:
        - sendText: >
            Hello, World!
            Do you want a personal greeting?
        - getInput:

So after giving a general “Hello, World!” greeting (with the help of the sendText action), your Assistant asks the user whether they want a more personal, maybe even warm, welcome. Then the Assistant switches to listening mode with the getInput action.

Head over to the Intents section in the editor. You will already have one intent there called ok_great, just to get you started. But we’ll create a new one. Try to imagine what a user might say in response to the question “Do you want a personal greeting?”

Let’s start small. A user may say yes, for example:

    ---
    intents:
      ok_great:
        - 'OK'
        - 'Great'
      yes:
        - yes
        - sure
        - of course
        - go for it
        - yeah
        - I might
        - it would be nice

In the code above we defined one intent named yes, and just below it are all the possible variants of how a user might say that (they are called utterances, if you want to impress your colleagues).

So someone who doesn’t talk a lot would just say “Sure”, but there are folks out there who really make an effort and want to be polite, so they would go with something like “It would be nice”.

It’s your job as a designer of a voice app to come up with those variants. As you can imagine, people are different. And so is the way they choose to answer even the simplest, most straightforward questions. The more variants (utterances) you provide for each intent, the more likely your voice app will be remembered as a good one.
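As a rough sketch of what “more variants” can look like, the yes intent could be extended like this. The added utterances are only illustrative suggestions and are not part of the course’s source code; pick the phrases your own users are likely to say:

```yaml
intents:
  yes:
    - yes
    - sure
    - of course
    # Illustrative additions, not taken from the course repository
    - yes please
    - absolutely
    - why not
    - sounds good
```

A practical way to collect such variants is to ask a few people the question out loud and write down exactly what they answer.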

Assistants are only as “smart” as we make them.

Ok, let’s get back to our script. So far you’ve covered only one possible answer to the question “Do you want a personal greeting?”. Let’s introduce some negativity into our script:

    ---
    intents:
      ok_great:
        - 'OK'
        - 'Great'
      yes:
        - yes
        - sure
        - of course
        - go for it
        - yeah
        - I might
        - it would be nice
      no:
        - no
        - nope
        - never
        - just leave me alone

Again, just take a brief moment to appreciate how different a human “no” can sound.

That was it for this chapter. You taught your Assistant how to understand some basic answers (and variants) different users can give.

In the next chapter you’ll learn how to teach your Assistant to decide…

Source code for this chapter: https://github.com/andremaha/bottalk-course/tree/master/chapter-3

What happens next - deciding what to do with the Answer

It’s time to take one step further in making our Assistant even more intelligent: let’s create decisions based on what the user said.

To do that in BotTalk you’ll need to use the next section of the step. It does make sense, if you think about it. Let’s review the process we’ve created so far:

- Assistant welcomes the user and asks something (sendText)

- Assistant switches to listening mode (getInput)

- We’ve configured possible answers

What’s next?

Well, now you need to connect each possible answer with the next step your Assistant will go to:

    - name: My first step
      actions:
        - sendText: >
            Hello, World!
            Do you want a personal greeting?
        - getInput:
      next:
        # If a user says yes (see Intents for details)
        yes: Personal Greeting
        # If a user says no
        no: General Greeting

    - name: Personal Greeting
      actions:
        - sendText: >
            Great! Now I want to greet you personally with the
            warmest welcome!

    - name: General Greeting
      actions:
        - sendText: >
            As you wish. No personal greeting for you then.

So if a user would say something like “go for it”, or “sure”, or “it would be nice” - basically anything that you defined in the last chapter as a variant (or utterance) of the yes intent - THEN an Assistant will go to the Personal Greeting step and will read this text out loud:

Great! Now I want to greet you personally with the warmest welcome!

If, on the other hand, a user doesn’t want a personal greeting and reacts to the question with “just leave me alone” or just “no”, the next step the Assistant will jump to is General Greeting:

As you wish. No personal greeting for you then.

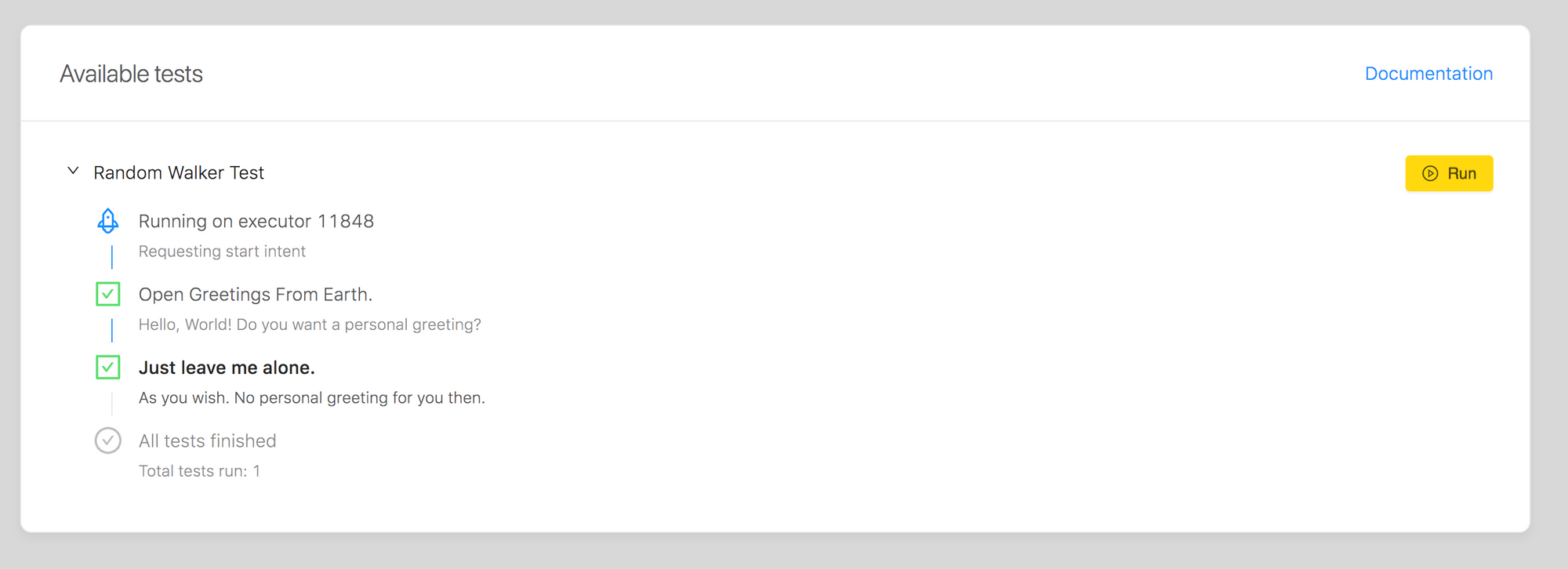

So far so good? Let’s hear this logic in action! Go to the Test tab and hit the Run button:

As you can see in the screenshot, BotTalk’s Random Walker Test chose a negative answer to the question. You can rerun the test multiple times to get it to choose another answer:

Just take a listen, isn’t it exciting? You just taught your assistant to decide!

Congratulations! You finished the fourth chapter. Go ahead and create a couple of steps and play around. The best way to learn is to experiment!
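If you’d like a starting point for experimenting, here is one possible sketch. It uses only what you’ve seen so far (sendText, getInput, next and the yes / no intents). The Goodbye step and its text are made up for this example, and it assumes that next may point back to an earlier step by name, which the course has not shown yet, so treat it as something to try in the Tester rather than as confirmed behavior:

```yaml
- name: Personal Greeting
  actions:
    - sendText: >
        Great! Now I want to greet you personally with the
        warmest welcome! Shall we start over?
    - getInput:
  next:
    # Assumption: a step can route back to an earlier step
    yes: My first step
    # "Goodbye" is a hypothetical step name chosen for this sketch
    no: Goodbye

- name: Goodbye
  actions:
    - sendText: Goodbye, and thanks for stopping by!
```

Run the Random Walker Test a few times and listen to which path it takes.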

Source code for this chapter: https://github.com/andremaha/bottalk-course/tree/master/chapter-4